Meaningful model comparisons have to include reasonable competing models and also all data: A rejoinder to Rieskamp (in press)

Abstract

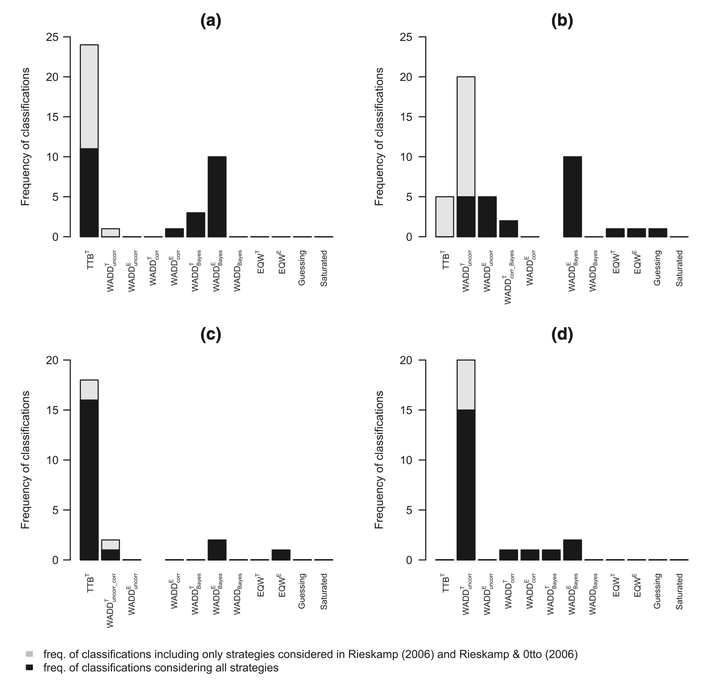

Proper model comparisons require including all reasonable competing models and all data. In an article in this issue, we highlight that excluding some models was common in parts of the literature supposedly supporting fast-and-frugal heuristics, and we show in comprehensive simulations and exemplary reanalyses of studies by Rieskamp that this can, under specific conditions, lead to wrong conclusions. In a comment in this issue, Rieskamp provides no conclusive arguments against these basic principles of proper methodology. Hence, the principles of including (i) all reasonable competing models and (ii) all data still stand and must remain the standards for evaluating past and future empirical work. Rieskamp addresses the first of the two issues in a Bayesian analysis. However, he violates the second principle by omitting data in order to uphold his previous conclusion, which invalidates his results. We explain in more detail why excluding data leads to biased estimates in this context and provide a refined Bayesian analysis, of course based on all data, that underlines our previously raised concerns about the criticized studies. Instead of arguing against the two basic principles of model comparison, we recommend that authors of affected studies conduct proper reanalyses including all models and data.